Energy-efficient computing

OK, so here’s yet another set of benchmarks on the new M2 Pro CPU. Apple has doubled the number of efficiency cores from 2 in the M1 Pro and Max to 4 in the M2 Pro and Max, and this caught my interest.

The benchmark is a compilation job using clang 14.0.0. Let’s compile PostgreSQL 15.1¹ from source and time the make step. I’m running this on a 14" MacBook Pro with the entry-level 10-core M2 Pro (6 performance and 4 efficiency cores).

I’m interested in energy consumption. How can we measure that? It turns out macOS comes with a nice command, powermetrics, that reports the current power draw of the M-family SoCs broken down by component: CPU, GPU and ANE² (the Apple Neural Engine, the inference co-processor). Unfortunately there is no package power output (anymore), so I suppose RAM power is not included.

This handy little script writes out the timestamp and CPU power each second:

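```bash
#!/bin/bash
# log epoch and CPU power [mW] once per second
# (call with sudo: powermetrics needs root)

powermetrics --samplers cpu_power -i 1000 \
  | grep --line-buffered "CPU Power" \
  | while read -r line; do
      # keep only the digits, e.g. "CPU Power: 5432 mW" -> "5432"
      echo "$(date +%s) $(echo "$line" | tr -cd '0-9')"
    done
```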

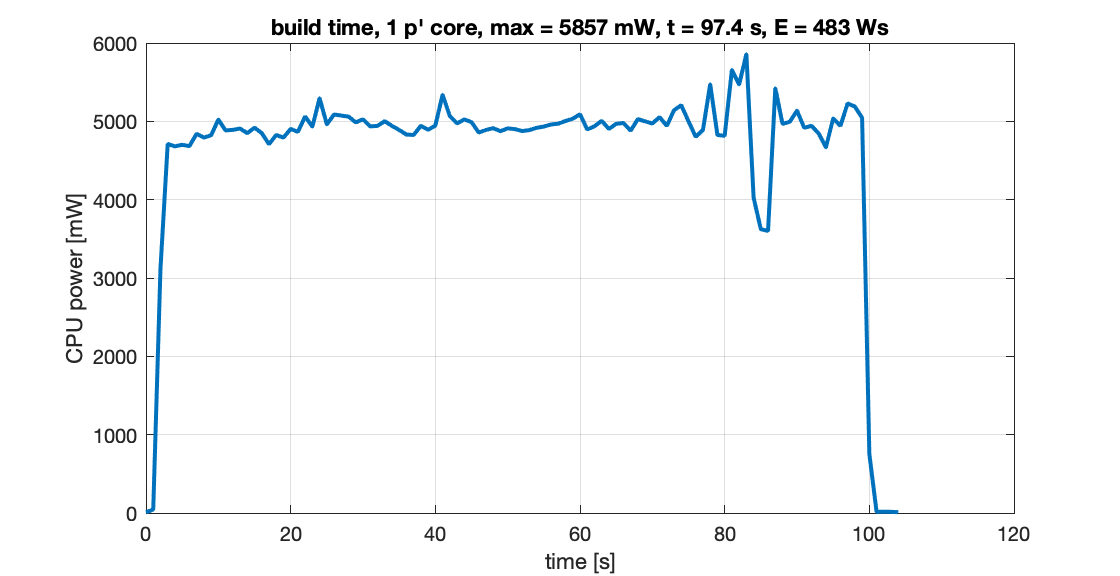

So, without further ado, here’s the make -j 1 step, pegging 1 normal (performance) core:

OK, this takes 97.4 seconds. The power draw is mostly constant between 5 and 6 W, in line with what others have measured.

Idle CPU power is something like 10-20 mW, so let’s ignore it and integrate the power over time to get the overall energy consumption³: 483 Ws (or joules, if you prefer).
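
The integration step can be done with a few lines of awk. This is a minimal sketch, assuming the log format of the script above (one `<epoch> <mW>` line per 1 s sample) and assuming each reading approximates the average power over its interval, so a simple rectangle-rule sum is enough. The function name `energy_ws` and its optional time-window arguments are hypothetical.

```bash
# Hypothetical helper: integrate a "<epoch> <mW>" power log into energy [Ws].
# Assumes one sample per interval (default 1 s, matching powermetrics -i 1000)
# and treats each sample as the average power over its interval.
#
# Usage: energy_ws <logfile> [interval_s] [start_epoch] [end_epoch]
energy_ws() {
  local log="$1" dt="${2:-1}" t0="${3:-0}" t1="${4:-9999999999}"
  awk -v dt="$dt" -v t0="$t0" -v t1="$t1" '
    $1 >= t0 && $1 <= t1 { sum_mw += $2 }        # only samples inside the window
    END { printf "%.1f\n", sum_mw * dt / 1000 }  # mW * s -> Ws
  ' "$log"
}
```

Passing the build’s start and end epochs as the window is one way to leave the idle samples before and after the build out of the sum.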

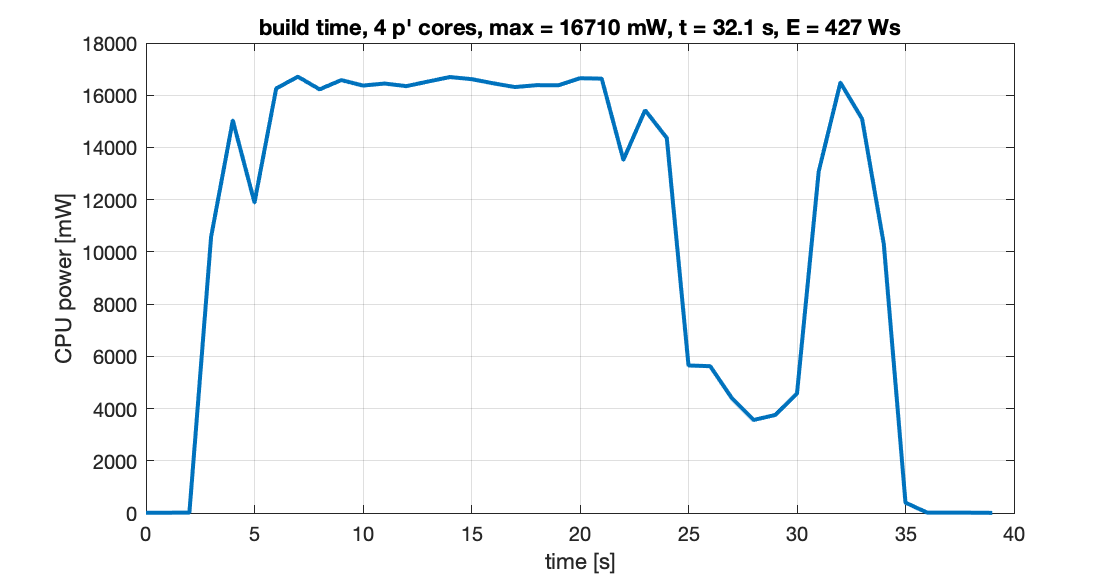

Let’s use up to 4 performance cores now, with make -j 4:

The power draw rises, and the time goes down to 32.1 seconds. Parallelism is not 100% efficient because of some sequential steps that also show up on the graph (likely linking, or compiling things along a dependency chain). So that’s 3.0 times faster for 4 times the cores. Interestingly, the overall energy consumption is only a bit lower: 427 Ws vs. 483 Ws. Basically the same.
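
Dividing each run’s energy by its duration gives its average power, which shows why the energy barely changes: with 4 jobs the average draw rises by a factor of about 2.7, while the runtime shrinks by a factor of about 3.0. The values below are derived only from the measured times and energies (subscripts give the number of jobs):

$$
\begin{aligned}
S_4 &= \frac{t_1}{t_4} = \frac{97.4\ \text{s}}{32.1\ \text{s}} \approx 3.03, \qquad \text{parallel efficiency} = \frac{S_4}{4} \approx 0.76\\
\bar P_1 &= \frac{E_1}{t_1} = \frac{483\ \text{Ws}}{97.4\ \text{s}} \approx 4.96\ \text{W}, \qquad
\bar P_4 = \frac{E_4}{t_4} = \frac{427\ \text{Ws}}{32.1\ \text{s}} \approx 13.3\ \text{W}\\
\frac{E_4}{E_1} &= \frac{\bar P_4}{\bar P_1}\cdot\frac{t_4}{t_1} \approx \frac{2.68}{3.03} \approx 0.88
\end{aligned}
$$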

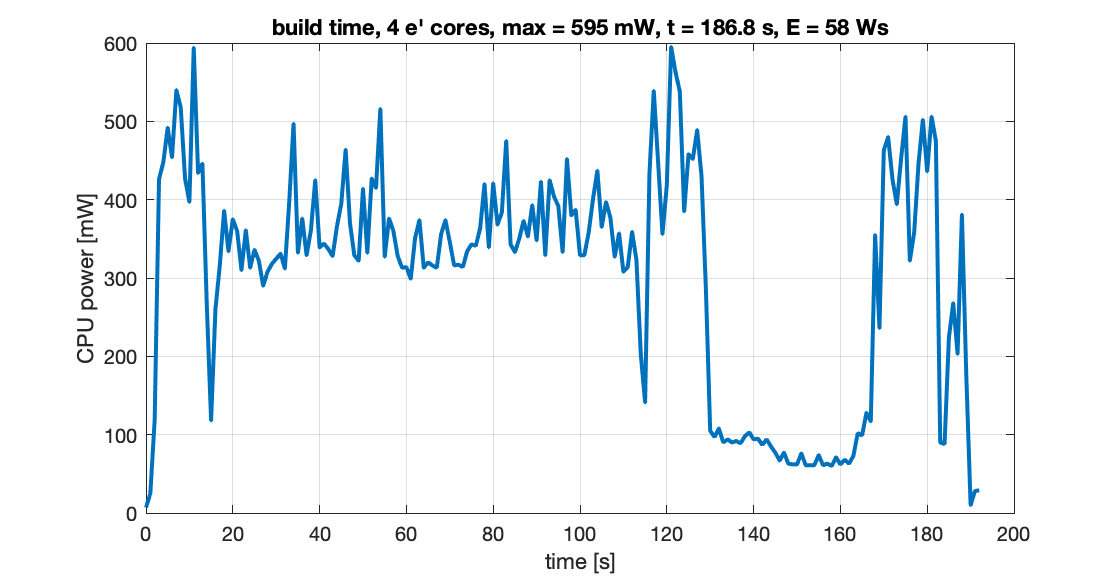

But, bear with me: let’s run the compilation on the efficiency core. We can do this using the command:

taskpolicy -c background make -j 4

This “nices” the compilation so to make use of only the efficiency cores! Here we go:

Comparing the two 4-core runs, that’s 186.8 s vs. 32.1 s, or 5.8 times slower. However, the whole task consumes just 58 Ws vs. 427 Ws! So even though it took much longer, the power draw was so low that the job completed with just 14% of the energy consumed by the previous run.
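
The same energy-over-time arithmetic shows where the saving comes from. Writing the efficiency-core run with subscript E and the 4-performance-core run with subscript 4, the average draw drops by a factor of about 43, which more than offsets the 5.8 times longer runtime:

$$
\begin{aligned}
\bar P_4 &= \frac{427\ \text{Ws}}{32.1\ \text{s}} \approx 13.3\ \text{W}, \qquad
\bar P_E = \frac{58\ \text{Ws}}{186.8\ \text{s}} \approx 0.31\ \text{W}\\
\frac{E_E}{E_4} &= \frac{\bar P_E}{\bar P_4}\cdot\frac{t_E}{t_4} \approx \frac{5.82}{42.8} \approx 0.14
\end{aligned}
$$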

I wonder why efficiency cores are not yet available on servers. Electricity and cooling are major cost factors in data centers, and there is a huge amount of batch processing where cost matters more than speed.

Extrapolating these findings and ignoring all costs except CPU energy usage, it is as if I had the choice between paying 1 EUR to have a job complete in 1 minute or just 14 cents to have it completed in 6 minutes.

Usually we think about costs per minute, so let’s translate this into paying 1 EUR per minute for 1 minute vs. a bit more than 2 cents per minute for 6 minutes. That would be a huge cost saving for batch processing!
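
A worked version of the per-minute translation: under the same CPU-energy-only assumption, the cost C is the electricity price p times the energy, so the cost per minute is simply p times the average power draw:

$$
\begin{aligned}
C &= p\,E = p\,\bar P\,t \quad\Rightarrow\quad \frac{C}{t} = p\,\bar P\\
\frac{1\ \text{EUR}}{1\ \text{min}} &= 1\ \text{EUR/min}
\quad\text{vs.}\quad
\frac{0.14\ \text{EUR}}{6\ \text{min}} \approx 0.023\ \text{EUR/min}
\end{aligned}
$$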

Where are the server chips with efficiency cores in the clouds where I rent my VMs?

PS: if you’re curious, make -j 10 takes 22.0 seconds, draws a maximum power of 24.2 W and consumes 357 Ws.